In the latest edition of my book, Understanding Quantum Computing 2023, I proposed a framework to analyze the quantum computing case studies that are flourishing nowadays.

It is located in the book just before 57 pages listing various case studies in 16 vertical markets (healthcare, energy, chemistry, transportation, logistics, retail, telecommunications, finance, insurance, …). These pages cite 239 scientific or review papers related to these market case studies.

When you come across an analyst or IT vendor white paper, or an academic review paper, describing how quantum computing will transform your industry, it is usually written in the present tense and showcases many practical case studies, sometimes with their scientific references.

Yet, so far, no corporation has deployed these solutions. What explains this discrepancy? How can you assess these case studies’ readiness for business-grade applications? This is a new challenge that I try to address here. We’ll first look at the conditions that could enable quantum computing to add some competitive value compared with classical computing, and then at ways to classify and assess case studies.

Most of the time, these case studies mislead us about the reality of a potential quantum advantage. Various tricks are used to confuse the audience about the present versus the future, about the nature of the quantum advantage and about the classical references used for comparison.

This paper is a more in-depth version of the book text with the addition of more examples and comments.

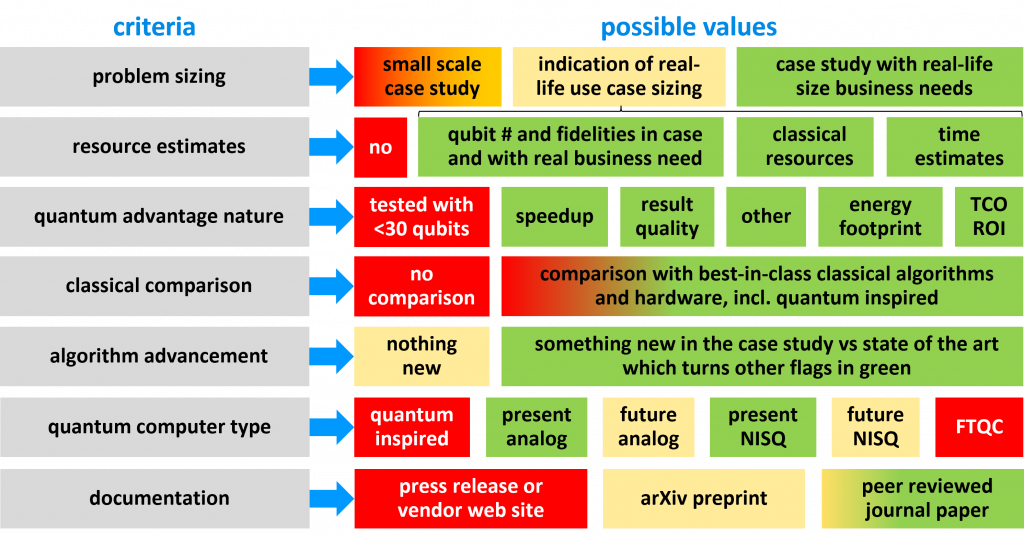

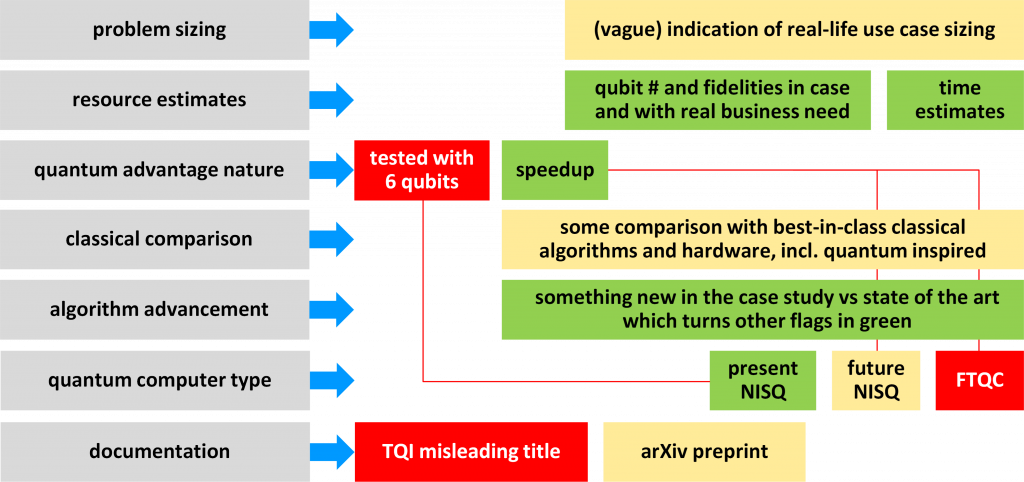

The proposed framework contains a set of questions to ask when reviewing case studies, and color codes showing potential red flags that make a case study moot or green flags that make it relevant, in the present or in the future. We’ll call it the PReQaCAQD framework :). You can pronounce it however you want! The marketing readiness of this acronym is somewhat synchronized with the availability of FTQC (fault-tolerant quantum computers).

Red flags indicate key missing points that make the case study irrelevant for showing any quantum computing advantage on the given business problem. Orange flags are intermediate situations but not real showstoppers. Green flags indicate a serious use case. Quantum-inspired use cases do not use quantum computing and therefore should not be presented as “quantum computing use cases”. TCO means total cost of ownership, an economic concept coming from the classical information technology world, and ROI means return on investment; both are evaluated against 100% classical solutions.

In the following text, NISQ refers to existing or near-term noisy intermediate-scale quantum computers, whether using gate-based programming (à la IBM, Rigetti, IonQ, etc.) or analog quantum computing (à la D-Wave or Pasqal/QuEra). FTQC refers to future fault-tolerant quantum computers using “logical qubits” made of many physical qubits, which have a much better quality (i.e., lower error rates) than their underlying physical qubits. When available, they will enable the execution of larger (more qubits) and deeper (more gates) algorithms.

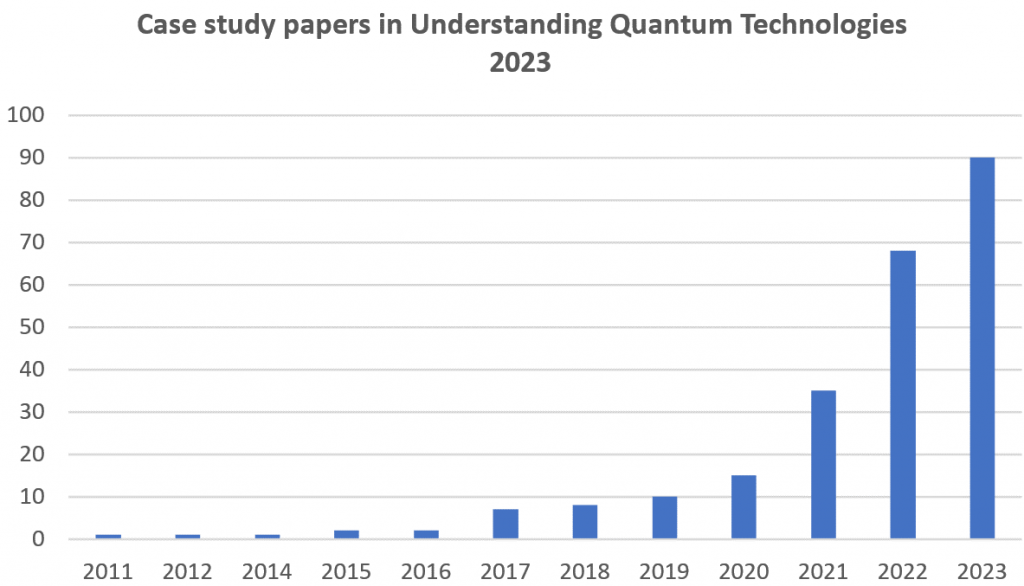

Below is a chart of the case study papers referenced in my book, per publication year, showing a good growth trend. This significant number of papers helped me craft the proposed framework.

In the current literature, there are indeed very few papers explaining how to assess case studies. I only recently found Biology and medicine in the landscape of quantum advantages by Benjamin A. Cordier et al, November 2022 (31 pages), which provides some guidance to assess case studies in the healthcare market. But there is no such paper for generic case study analysis.

1/7 – Problem sizing

One key aspect of a case study is the sizing of the problem to solve. Does the experiment use real-life data matching usual business needs, or is it just a small-scale prototype? You have to know the scale of the problems businesses are trying to solve more efficiently with quantum computers. It deals with the size of the data and the number of parameters or variables of a problem, like the number of assets in a financial portfolio, the number of cars and travel steps to optimize, or the size of an organic molecule to simulate. Most existing case studies use small-scale data. In that case, they should be complemented with some indication of the problem size required by real-life scenarios.

Many current quantum computing case studies happen to be about quantum many-body physics simulations (Fermi-Hubbard models, ferromagnetism, spin glasses, etc.). These domains are usually cryptic for laypeople, and determining whether the solved problem corresponds to a useful industry case requires some investigation. See for example Observing ground-state properties of the Fermi-Hubbard model using a scalable algorithm on a quantum computer by Stasja Stanisic, Ashley Montanaro et al, Phasecraft and University of Bristol, Nature Communications, October 2022 (11 pages).

The current empirical distribution of case studies is about 49% in the first category (small scale with no indication of real-life scenario needs), 49% in the second category (small scale + indication of real-life use case sizing) and nearly zero (<2%) in the last category, corresponding to real-life present use cases. Most of the time, these 2% cases are poorly documented and suspicious (see the last criterion on the way a case study is documented).
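These three problem-sizing categories can be encoded as a simple triage rule. This is a minimal sketch: the function and parameter names are hypothetical, and the color assigned to each category is my assumption rather than a copy of the framework chart.

```python
def sizing_flag(tested_at_real_scale: bool, real_scale_documented: bool) -> str:
    """Criterion 1 (problem sizing) triage; the colors are an assumed mapping."""
    if tested_at_real_scale:
        # Real-life present use case (<2% of case studies, often poorly documented).
        return "green"
    if real_scale_documented:
        # Small-scale test, but the real-life problem size is stated.
        return "orange"
    # Small-scale test with no indication of the real-life problem size.
    return "red"


print(sizing_flag(tested_at_real_scale=False, real_scale_documented=True))
```

A real-scale case still deserves the documentation check of criterion 7 before its green flag is trusted.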

2/7 – Resource estimates

A good case study presents estimates of the classical and quantum computing resources needed to solve a given real-world problem, related to the real-life problem sizing defined above. This is even more important when the solution was only tested on a small-sized problem. You need some clues on the size and type of quantum computers that could solve a real-life problem.

When looking at the details:

- NISQ case studies should evaluate the algorithm depth and breadth, the number of shots and, for all variational algorithms, the cost of the classical computing. Are qubit counts and fidelities mentioned in the case study, both for the tested instance and for its extension to a real business need sizing?

- FTQC case studies should highlight the number of T gates, which drives the bulk of the quantum error correction overhead, and the target logical qubit fidelities. Coupled with the physical qubit quality and connectivity, this leads to an estimate of the number of physical qubits. It may be different if the target platform uses the MBQC (measurement-based quantum computing) paradigm with photonic cluster states. There are useful resource estimation tools for FTQC algorithms, like Microsoft’s Resource Estimator, described in Assessing requirements to scale to practical quantum advantage by Michael E. Beverland et al, Microsoft Research, November 2022 (41 pages).

- Analog quantum computing case studies should also come with some data on the hardware required to obtain a quantum advantage, like the number of qubits and their topology for a quantum annealer or a quantum simulator.
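For variational NISQ algorithms, the shot count is easy to underestimate. The sketch below multiplies out a rough budget under explicit assumptions: gradients estimated with the parameter-shift rule (two circuit evaluations per parameter), one circuit per group of commuting Hamiltonian terms, and hypothetical problem sizes and shot rate. It is an illustration, not a figure from any cited paper.

```python
def variational_shot_budget(iterations: int, n_params: int,
                            n_term_groups: int, shots_per_circuit: int) -> int:
    """Rough shot count for a VQE/QAOA-style loop with parameter-shift gradients.

    Assumes 2 circuit evaluations per parameter per gradient (parameter-shift
    rule) and one circuit per group of commuting Hamiltonian terms. Ignores
    cost-function evaluations, error mitigation and recalibration overheads.
    """
    circuits_per_iteration = 2 * n_params * n_term_groups
    return iterations * circuits_per_iteration * shots_per_circuit


def qpu_hours(total_shots: int, shots_per_second: float) -> float:
    """Wall-clock QPU time, ignoring queueing and classical round trips."""
    return total_shots / shots_per_second / 3600


# Hypothetical problem: 200 optimizer iterations, 50 parameters,
# 100 measurement groups, 1,000 shots per circuit, 1 kHz shot rate.
shots = variational_shot_budget(200, 50, 100, 1_000)
print(f"{shots:,} shots, about {qpu_hours(shots, 1_000):,.0f} QPU hours")
```

Every factor enters multiplicatively, so a case study that omits any one of them can be off by orders of magnitude.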

Most case study scientific papers are hard to decipher, particularly their complicated charts. Some charts are misleading when they extrapolate current small-scale performance to larger-scale systems without enough theoretical or practical grounds.

In all cases, all the classical resources involved in the case study should be documented, whether in NISQ or FTQC mode. All algorithms require some classical data and code preparation, and nearly all NISQ algorithms use a variational technique that generates a large classical computing overhead. FTQC algorithms have, at least, a significant (classical-side) quantum error correction overhead that is architecture-specific. Not only is this classical resource important to know, but its weight and share in the entire solution is also an important indicator. The advantage may lie there and not in the (tiny) part of the algorithm that runs on a quantum computer with a few qubits. This is the case with Constrained Quantum Optimization for Extractive Summarization on a Trapped ion Quantum Computer by Pradeep Niroula et al, June 2022 (16 pages) from JPMorgan, about a natural language processing solution running with 14 and 20 qubits but with a lot of data ingestion and preparation done classically.
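Documenting the classical share can be as simple as timing every stage of the pipeline. A minimal sketch, with hypothetical stage names and timings, that computes which fraction of an end-to-end hybrid run is actually spent on the QPU:

```python
def quantum_share(qpu_seconds: float, classical_seconds: dict[str, float]) -> float:
    """Fraction of total wall-clock time spent on the QPU."""
    total = qpu_seconds + sum(classical_seconds.values())
    return qpu_seconds / total


# Hypothetical timings for one end-to-end run of a hybrid workflow.
classical = {
    "data ingestion and preparation": 3_600.0,
    "classical optimizer": 1_800.0,
    "error mitigation post-processing": 600.0,
}
share = quantum_share(120.0, classical)
print(f"QPU share of the run: {share:.1%}")
```

Reporting the per-stage breakdown, not just the total, shows where a claimed improvement actually comes from.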

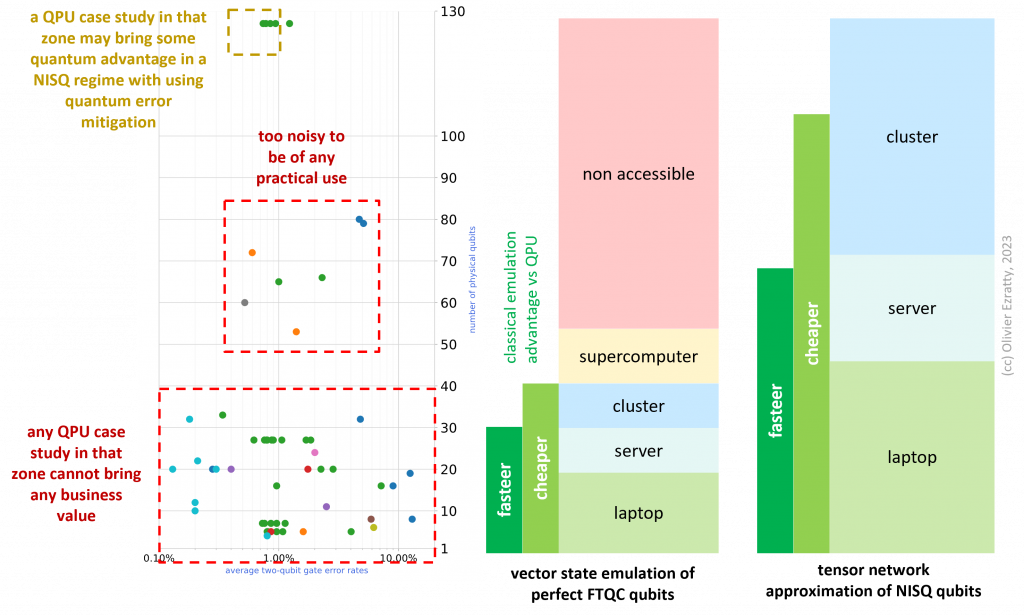

Indeed, if the solution was tested on fewer than 20 qubits, you can consider it a classical solution that could run unchanged, faster and cheaper on your own laptop! This is the case for most gate-based use cases. Under 30 qubits, it can run on a classical server cluster. Beyond 30 qubits, its emulation requires a larger system and may run slower in some circumstances.
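These thresholds follow from the memory footprint of a state vector emulator, which stores 2^N complex amplitudes for N qubits. A back-of-the-envelope sketch, assuming double-precision complex amplitudes (16 bytes each) and ignoring the emulator's working memory and run time:

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for 2**n complex amplitudes; complex128 uses 16 bytes each."""
    return bytes_per_amplitude * 2 ** n_qubits


for n in (20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.3f} GiB")
```

20 qubits fit in 16 MiB and 30 qubits in 16 GiB; each additional qubit doubles the footprint.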

A bad example is Quantum Algorithm for Maximum Biclique Problem by Xiaofan Li et al, September 2023 (14 pages), which proposes to find a maximum “biclique within a given bipartite graph”, showing a polynomial speedup, with potential use cases in e-commerce, social network recommendations and biology, but with no indication of the qubit and time resources needed to solve practical problems.

A better example comes from Developing industrial use cases for physical simulation on future error-corrected quantum computers by Nicholas Rubin and Ryan Babbush, Quantum AI, October 2023, which honestly describes various FTQC use cases for solving physical simulation problems. The results are mind-blowing. Fault-tolerant quantum simulation of materials using Bloch orbitals by Nicholas C. Rubin, Ryan Babbush et al, PRX Quantum, February-October 2023 (52 pages) provides resource and time estimates in the hundreds of thousands of logical qubits and in the thousand-year range. Quantum computation of stopping power for inertial fusion target design by Nicholas C. Rubin et al, August 2023 (37 pages) lists a need for 5,650 to 33,038 logical qubits for simulating various settings, like the interactions between protons and deuterium in fusion reactors. The use case also shows a number of Toffoli gates in the 10^14 to 10^20 range. Finally, Reliably assessing the electronic structure of cytochrome P450 on today’s classical computers and tomorrow’s quantum computers by Joshua J. Goings, Craig Gidney et al, Google, PNAS, February 2022 (24 pages) describes the resources needed to simulate the cytochrome molecule. With physical qubit (two-qubit gate) error rates of 0.1%, the run time would be 73 hours and would require 4.6M physical qubits. With an error rate of 0.001%, which is currently way out of reach, computing time could be down to 25 hours using “only” 500K physical qubits. The equivalent classical computing time is 4 years and requires 348 GB of RAM and 2 TB of storage.

In Even More Efficient Quantum Computations of Chemistry Through Tensor Hypercontraction by Joonho Lee, Craig Gidney, Nathan Wiebe, Ryan Babbush et al, PRX Quantum, July 2021 (62 pages), the same Google AI team estimated the resources needed to compute the ground state of FeMoCo (4 million physical qubits with an error rate of 0.1%), although this wouldn’t directly translate, as is usually claimed, into a way to improve the infamous Haber-Bosch industrial process used to produce ammonia from nitrogen.
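Putting the cytochrome P450 figures quoted above side by side helps read them. This sketch only divides the quoted run times, using a 365.25-day year (my assumption); it says nothing about cost, energy, or how the classical estimate would evolve with better classical hardware or algorithms.

```python
HOURS_PER_YEAR = 365.25 * 24

# Figures quoted above for cytochrome P450 (Goings, Gidney et al).
classical_hours = 4 * HOURS_PER_YEAR
scenarios = {
    "0.1% physical error rate": (73, 4_600_000),
    "0.001% physical error rate": (25, 500_000),
}

for label, (hours, physical_qubits) in scenarios.items():
    ratio = classical_hours / hours
    print(f"{label}: {physical_qubits:,} physical qubits, "
          f"~{ratio:,.0f}x shorter than the classical estimate")
```

For this single instance, the wall-clock ratio is roughly 480 to 1,400, to be weighed against hundreds of thousands to millions of physical qubits.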

All in all, I have observed that FTQC case studies are better documented, as in the above examples. In all cases, though, classical resources are poorly documented.

3/7 – Quantum advantage nature

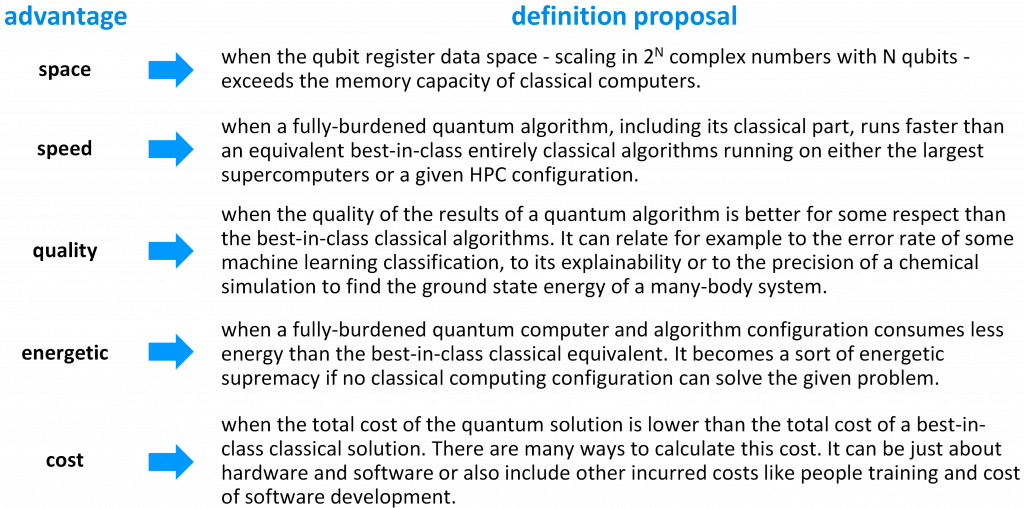

What is a quantum advantage? For a long time, it has been defined as a situation where a computing speedup can be observed between a quantum hardware and software combination and a best-in-class classical solution. Preferably, this speedup was to be exponential, leaving any classical computing solution in the dust of uselessness for solving large-scale problems. That is the speed advantage. But a speed advantage must be practical and not just theoretical. Do not confuse a speedup with an actual computing time. Complexity theory classifications are interesting for theoretical purposes but are useless if the actual computing time to solve a given problem exceeds a human lifetime! And any comparison should include all the classical computing involved in any quantum computing solution. This corresponds to the notion of quantum utility as presented in Milestones on the Quantum Utility Highway by Catherine C. McGeoch and Pau Farré, D-Wave Systems, May 2023 (34 pages).
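The gap between a speedup and an actual computing time can be made concrete with a toy model. It assumes a Grover-type quadratic speedup (a classical search in N steps versus a quantum one in about sqrt(N) steps), ignores constant factors and classical overheads, and uses hypothetical per-step times. The quantum solution only wins beyond the crossover size, where both take the same wall-clock time.

```python
import math


def crossover_size(t_classical: float, t_quantum: float) -> float:
    """Size N where N classical steps take as long as sqrt(N) quantum steps."""
    return (t_quantum / t_classical) ** 2


def runtimes(n: float, t_classical: float, t_quantum: float) -> tuple[float, float]:
    """Wall-clock seconds for an O(N) classical and an O(sqrt(N)) quantum search."""
    return n * t_classical, math.sqrt(n) * t_quantum


# Hypothetical step times: 1 ns per classical step, 1 ms per error-corrected
# quantum step (constant factors and classical overheads ignored).
t_c, t_q = 1e-9, 1e-3
print(f"crossover at N ~ {crossover_size(t_c, t_q):.0e}")
for n in (1e9, 1e12, 1e15):
    classical_s, quantum_s = runtimes(n, t_c, t_q)
    print(f"N={n:.0e}: classical {classical_s:,.0f} s, quantum {quantum_s:,.0f} s")
```

With these assumed step times, the quantum search only catches up around 10^12 items; a 10 times slower quantum step pushes the crossover 100 times further out.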

A quantum computing speed advantage is also frequently confused with the exponential space advantage of quantum computing. This space advantage comes from the large quantum state space of N qubits, which has an exponential size of 2^N complex numbers. This large “data handling space” is not sufficient to generate a polynomial or exponential speedup, even though, when N>55, this data space cannot be fully emulated on a classical computer due to memory constraints. If the case study has been tested with fewer than 30 qubits, you can be sure that it does not bring any speed advantage over classical computers, not even over a simple server.

A quantum computing advantage can also be qualitative when the generated result is somewhat better than its classical counterpart, without necessarily having been produced faster. This can happen, for example, with machine learning when it needs less training data or produces classification and prediction models with fewer errors. Here, you should also make sure that this qualitative result is not accessible to classical computers. For example, if the case study’s quantum code runs on fewer than 20 qubits, it is the equivalent of a classical algorithm improvement that could run faster on your own laptop. It would be a red flag.

You may also trade quality for speed, typically with the heuristic-based approaches used in quantum computing, particularly in the NISQ regime with variational algorithms. Comparisons may be tricky when you compare apples and oranges, like the best solution on one hand and a non-optimal solution on the other hand.
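One way to avoid comparing a best solution with a non-optimal one is to fix a target quality first, then compare the time each solver needs to reach it. A minimal time-to-target sketch, assuming a minimization problem with positive costs, a best-known reference value, and hypothetical solver traces:

```python
from typing import Optional

Trace = list[tuple[float, float]]  # (elapsed seconds, best cost found so far)


def time_to_target(trace: Trace, best_known: float, tolerance: float) -> Optional[float]:
    """First time a minimization run gets within `tolerance` of the best known cost.

    Assumes positive costs; returns None if the target quality is never reached.
    """
    threshold = best_known * (1 + tolerance)
    for elapsed, cost in trace:
        if cost <= threshold:
            return elapsed
    return None


# Hypothetical anytime traces for the same problem instance.
quantum = [(1.0, 130.0), (5.0, 112.0), (20.0, 104.0)]
classical = [(0.5, 120.0), (2.0, 101.0), (10.0, 100.0)]
for tol in (0.15, 0.05, 0.0):
    print(tol, time_to_target(quantum, 100.0, tol), time_to_target(classical, 100.0, tol))
```

Reporting times at several quality levels makes the speed/quality trade-off explicit instead of hiding it in a single number.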

Then, there is the energetics of the solution. It is becoming impossible to ignore this aspect when deploying new technologies. A quantum computing solution may provide some energy advantage compared to classical solutions, but it remains to be proven on a case-by-case basis, at least at the quantum computer architecture level.

Finally, the total cost of ownership (TCO) of the new quantum solution must be evaluated. This cost includes all incurred expenses to deploy a solution: classical and quantum hardware, software, cloud, services, and training-related costs. Studies are not yet available at this point, given the immaturity of the technology. Some quantum computers have ridiculously high catalog prices, up to tens of millions of dollars. In that case, you are better off using cloud computing resources. You can then combine TCO with ROI (return on investment) considerations, given that all cost and value considerations must be compared to classical legacy solutions (see the related criterion below).
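The TCO and ROI reasoning boils down to comparing the extra cost of a hybrid quantum solution with the extra value it brings over a fully classical baseline. A sketch with hypothetical cost categories and figures (not market data), illustrating the on-premises versus cloud trade-off:

```python
from dataclasses import dataclass


@dataclass
class Solution:
    name: str
    upfront: float  # hardware purchase, integration, initial training
    yearly: float   # cloud fees, maintenance, staff, classical co-processing

    def tco(self, years: int) -> float:
        return self.upfront + self.yearly * years


def net_benefit(candidate: Solution, baseline: Solution,
                extra_value_per_year: float, years: int) -> float:
    """Extra business value minus extra TCO, relative to a fully classical baseline."""
    extra_cost = candidate.tco(years) - baseline.tco(years)
    return extra_value_per_year * years - extra_cost


# All figures are hypothetical, in millions of dollars.
classical = Solution("classical HPC", upfront=0.0, yearly=1.0)
on_prem = Solution("on-premises QPU", upfront=15.0, yearly=2.0)
cloud = Solution("cloud QPU", upfront=0.0, yearly=3.5)
for option in (on_prem, cloud):
    print(option.name, net_benefit(option, classical, extra_value_per_year=2.0, years=5))
```

A negative net benefit means the classical baseline remains the better business choice over the period, whatever the technical merits.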

The quantum utility terminology can be used to encompass these various combinations of quantum benefits. Here is a breakdown of a quantum advantage when comparing quantum and classical settings. When making any comparison, quantum settings should include their entire surrounding classical computing environment. Also, a comparison can be made either with the largest supercomputer in the world or with a smaller classical computing setting, like a mid-size HPC system. In the end, the business benefit will come from a given balance of cost, speed and quality benefits and trade-offs.

One bad example is Quantum Computers Can Now Interface With Power Grid Equipment, NREL, July 2023, which deals with a partnership between NREL and Atom Computing. They tout having put a quantum computer in the loop for grid optimization. In reality, it was a digital quantum emulator of the Atom Computing QPU. So, we are dealing here with a classical solution, not with a real quantum computer! And the solution is probably not deployed in production, but only tested at a small scale.

Another one is Practical application of quantum neural network to materials informatics: prediction of the melting points of metal oxides by Hirotoshi Hirai, Toyota, October 2023 (20 pages), which presents a practical application of quantum neural networks in the automotive industry… with only 10 qubits. The paper doesn’t indicate whether the test was run on an emulator or on a real quantum computer, which suggests it was probably only a classical emulation.

4/7 – Classical comparisons

We have previously mentioned the need to take into account all classical computing resources used in a quantum computing case study.

Now, a case study should compare this classical+quantum solution with a fully classical solution. There must be an honest and up-to-date comparison between the proposed quantum solution and equivalent classical solutions. It must rely on state-of-the-art hardware (HPC, GPUs, …) and software (algorithms, heuristics, tensor networks, …). The comparison must not mix apples and oranges between exact solutions and heuristic approximate solutions in a way that would favor one side, or at least it should make that clear. Also, approximate classical solutions can be “good enough” for most business needs, which then do not require a better optimized solution.

If the quantum computing solution can easily be emulated on a classical system, there is no quantum advantage at all. It is even worse when the case study was only run on an emulator: this does not tell whether it would scale well on a NISQ or FTQC quantum computer.

The chart below exemplifies this. In most existing case studies, the classical emulation of the solution is cheaper and faster than the quantum version. The chart is probably very kind to QPUs in the tensor network comparison. And it doesn’t take into account “quantum-inspired” tensor-based classical solutions outside the emulation realm. The chart on the left shows existing commercial QPUs with their qubit numbers and two-qubit fidelities. A more complete version, with company names and trajectories, is in the quantum error correction section of Understanding Quantum Computing 2023.

5/7 – Algorithm advancement

Was there something new in the case study vs the state of the art in both classical and quantum computing? Where was the progress? Does it have some business consequences?

This is actually the least important criterion. It is interesting for assessing the advancement of algorithmic work by academic researchers. If a “business” case study works well, is well documented, and uses an existing algorithm or a combination of existing algorithms, all will be fine.

Also, this should take into account classical algorithm advancements, which are the comparison points for quantum algorithms.

You may find case studies with generic algorithms not associated with a given business case. That was what IBM did with its kicked Ising model paper in June 2023, touting “quantum utility”, but with no clear link between this generic problem formulation and how it could be used in a corporate business case, like solving some optimization problem. See Evidence for the utility of quantum computing before fault tolerance by Youngseok Kim, Kristan Temme, Abhinav Kandala et al, IBM Research, RIKEN iTHEMS, University of Berkeley and the Lawrence Berkeley National Laboratory, Nature, June 2023 (8 pages).

It is the same with many generic chemical simulation algorithms, where no link is proposed with a real-life chemical case that classical solutions cannot address.

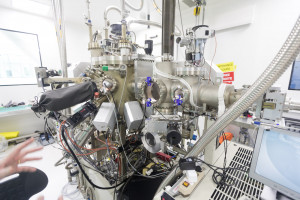

6/7 – Quantum computer type

Quantum case studies usually belong to one of these categories, ranging from implementable in the short term to the very long term:

- Classical emulation (not shown in the graph) does not demonstrate any quantum advantage whatsoever unless it is complemented with resource and computing time estimates for execution on a real quantum computer. If it comes from a hardware vendor, it is also an indication of the immaturity of their hardware platform. Also, emulations frequently use a state vector emulator mimicking perfect qubits. With real qubits, results are worse, and they get even worse with more qubits.

- Quantum inspired solutions. These solutions may improve the state of the art of existing classical solutions, but they are not quantum per se. They benefit from advances in tensor network algorithms (MPS, DMRG) and from CPU/GPU and HPC hardware performance improvements.

- Present Analog quantum computing solutions which can run on existing analog systems like a D-Wave annealer or a Pasqal/QuEra quantum simulator. At this point in time, these are the most powerful available solutions, but none has yet reached a quantum advantage.

- Future Analog quantum computing solutions which require a future analog computer with a larger number of qubits and/or better connectivity. This hardware is expected to show up within 5 years, supporting over 300 workable qubits in the case of analog quantum simulators.

- Present NISQ solutions which are being tested on an existing quantum computer and can usually also be tested in emulation mode on a classical computer. Their business value is usually minimal, and they usually showcase no quantum advantage in any category. Caution is required here.

- Future NISQ solutions for future quantum computers which may bring some quantum advantage. The related hardware may show up in the short term (IBM’s Heron with 133 qubits and 99.9% fidelities) or in the longer term (higher fidelities and even more qubits).

- FTQC solutions with the capacity to support from a hundred to thousands of logical qubits, with fidelities matching the algorithm breadth and depth. These QPUs may be available some day in the longer term, probably beyond 10 to 20 years. It is also important to have an idea of the required FTQC hardware, with documented resource estimates. There’s a big gap between having 100 and 4,000 logical qubits, particularly given that the required physical resources scale nearly polynomially here to ensure adequate logical qubit fidelities.

The color codes in the chart are easy to interpret: green is working today, orange may work in the near term (meaning, let’s say, in less than 5 years), and red is either not quantum (quantum-inspired) or in the distant future (>15 years). In that respect, beware of any paper starting with “Towards quantum advantage…” or containing “Early quantum advantage”.
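This color code can be written down as a small rule on the expected hardware horizon. A sketch, assuming the horizon is expressed in years; the 5-to-15-year band is left unclassified because the color code above does not cover it, and the example horizons are hypothetical:

```python
def horizon_flag(years_until_hardware: float, quantum_inspired: bool = False) -> str:
    """Color code for criterion 6, from the expected hardware availability horizon."""
    if quantum_inspired:
        return "red"          # not quantum computing at all
    if years_until_hardware <= 0:
        return "green"        # runs on hardware available today
    if years_until_hardware < 5:
        return "orange"       # near term
    if years_until_hardware > 15:
        return "red"          # far future
    return "unclassified"     # 5 to 15 years: not covered by the color code


# Hypothetical horizons, for illustration only.
examples = {
    "present analog or NISQ": (0, False),
    "future analog simulator (300+ qubits)": (4, False),
    "quantum-inspired tensor networks": (0, True),
    "FTQC with thousands of logical qubits": (20, False),
}
for label, (years, inspired) in examples.items():
    print(f"{label}: {horizon_flag(years, inspired)}")
```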

We may add a scale factor here. A future NISQ system may be very futuristic if, for instance, it requires 400 physical qubits with 99.999% two-qubit gate fidelities. An FTQC quantum computer needed for executing a given algorithm may also require anywhere from 100 to tens of thousands of logical qubits, which is not the same thing. Getting a mere 100 good logical qubits could take at least 10 to 15 years according to many vendors’ roadmaps.

7/7 – Documentation

The last criterion for validating a case study is how well it is documented.

Is it provided only with a vendor or customer press release and web page (red flag), with an arXiv preprint (better) or with a peer-reviewed journal paper (much better, although not necessarily safe: read the paper’s conclusions carefully)?

The more scientific details and data you have on the case study, the better. There may be a delay between the vendor/customer press release and a related scientific publication, so patience is a virtue here. In many cases, you will find a significant discrepancy between the press release, or the way the media showcase it, and the underlying scientific documentation.
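Since the documentation can improve over time, the flag should follow the best source available at review time. A sketch with hypothetical source labels; mapping arXiv preprints to orange is my assumption, and a green flag still requires reading the paper’s conclusions:

```python
# Ranked from most to least reliable; "orange" for preprints is an assumed color.
DOC_FLAGS = [
    ("peer_reviewed", "green"),
    ("arxiv_preprint", "orange"),
    ("press_release", "red"),
]


def documentation_flag(sources: set[str]) -> str:
    """Flag for criterion 7, based on the best documentation available."""
    for source, flag in DOC_FLAGS:
        if source in sources:
            return flag
    return "red"  # nothing beyond marketing material or a web page


print(documentation_flag({"press_release", "arxiv_preprint"}))
```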

There are actually different sources of “case studies”:

- An industry case study, usually published by an end-user corporation and, most of the time, some quantum hardware, software and/or service partner. These are the focus of this post.

- An algorithm paper not directly linked to an industry case. It can come out of some academic research group but also from vendors and even end-user customers. In my book, such papers are referenced in the “algorithms” section, not in the “Quantum computing applications” section. The proposed framework can still be used to analyze the relevance of many of these papers.

- A perspective or review paper on use cases in an industry sector or domain. For example, in healthcare, we have papers on health and medicine use cases, drug discovery applications, drug design, molecular biology, protein design, medical imaging, and cell-centric therapeutics, and, elsewhere, in industrial chemistry. These review papers are usually informative but rarely cover the various assessment criteria described in this post.

Case studies examples

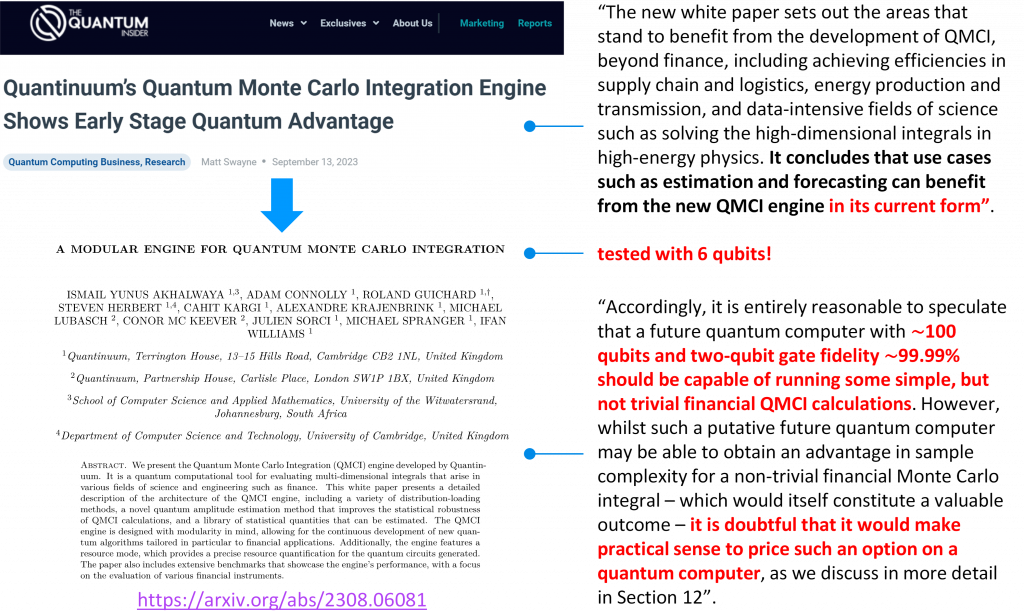

As an example, we will assess a case study from Quantinuum that was presented by The Quantum Insider in September 2023 as showing “early stage quantum advantage”. See Quantinuum’s Quantum Monte Carlo Integration Engine Shows Early Stage Quantum Advantage by Matt Swayne, TQI, September 2023.

It is not clear what that means exactly. When looking at the corresponding arXiv paper, A Modular Engine for Quantum Monte Carlo Integration by Ismail Yunus Akhalwaya et al, Quantinuum, August 2023 (87 pages), we clearly see that we are not yet in any form of quantum advantage. Indeed, the tests of this new multipurpose Monte Carlo engine were implemented using only 6 physical qubits, far from any quantum advantage since it can be emulated on your smartphone or laptop, probably faster than on any quantum computer. The engine contains a resource estimation tool which shows that an “early” quantum advantage would require at least 100 qubits with 99.99% two-qubit gate fidelities. This is far from what is available today, whatever the qubit technology. Right now, at best, we have about 99.6% two-qubit gate fidelities for fewer than 40 qubits.
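The distance between 99.6% and 99.99% two-qubit gate fidelities is larger than it looks. A back-of-the-envelope sketch, assuming independent errors and counting only two-qubit gates (a simplification; the gate counts are illustrative, not taken from the paper): the error rate must drop by a factor of about 40, and the crude circuit success estimate F^G collapses much faster at 99.6% as the gate count grows.

```python
def error_rate_gap(current_fidelity: float, target_fidelity: float) -> float:
    """How many times lower the two-qubit error rate must become."""
    return (1 - current_fidelity) / (1 - target_fidelity)


def success_estimate(fidelity: float, n_two_qubit_gates: int) -> float:
    """Crude circuit success probability, F**G: every two-qubit gate must succeed.

    Ignores single-qubit, measurement, idling and crosstalk errors.
    """
    return fidelity ** n_two_qubit_gates


today, needed = 0.996, 0.9999
print(f"error-rate gap: {error_rate_gap(today, needed):.0f}x")
for gates in (100, 1_000, 10_000):
    print(gates, round(success_estimate(today, gates), 4),
          round(success_estimate(needed, gates), 4))
```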

An assessment of the Quantinuum QMCI engine paper using our case studies evaluation framework is shown below. All in all, this is a new engine that may provide some quantum advantage but in future NISQ and FTQC QPUs, not those that are available as of 2023.

It is, however, much better than Characterizing a non-equilibrium phase transition on a quantum computer by Eli Chertkov et al, Quantinuum, November 2022 (25 pages), which is a 20-qubit use case with no real quantum advantage.

A good case study example is Financial Risk Management on a Neutral Atom Quantum Processor by Lucas Leclerc et al, CACIB, Multiverse, IOGS and Pasqal, December 2022 (17 pages), in which CACIB (France), along with Pasqal and Multiverse (Spain), worked on “fallen angel” detection for loan risk mitigation. Their training dataset used real-life bank data. The case is based on a binary classification task using a QUBO formulation and random graph sampling. It was compared with a classical tensor network-based algorithm. A quantum advantage was estimated to require about 150-342 neutral atoms to reach the target precision with linear extrapolation, and 2,800 with the subsampling method. The actual test on a Pasqal machine went up to 60 functional neutral atoms.

Here, criteria 1, 2, 3, 4 and 7 are thus well fulfilled. Criterion 6 (quantum computer type) fares well, given that the system required to obtain a quantum advantage is not positioned (theoretically) too far in the future, unlike FTQC systems with thousands of logical qubits.
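The seven criteria can be gathered in a simple scorecard. A minimal sketch of that bookkeeping, applied to the CACIB/Pasqal case as described above; the flag colors are my reading of the text, not an official scoring, and unassessed criteria are reported rather than guessed:

```python
CRITERIA = [
    "problem sizing", "resource estimates", "quantum advantage nature",
    "classical comparisons", "algorithm advancement",
    "quantum computer type", "documentation",
]


def summarize(scorecard: dict[int, str]) -> dict[str, object]:
    """Summarize a 1-based criterion -> flag scorecard; any red flag makes it moot."""
    red = [CRITERIA[i - 1] for i, flag in sorted(scorecard.items()) if flag == "red"]
    missing = [name for i, name in enumerate(CRITERIA, start=1) if i not in scorecard]
    return {"red_flags": red, "not_assessed": missing, "relevant": not red}


# CACIB/Pasqal reading of the text above; "orange" for criterion 6 is an
# assumption, and criterion 5 is not discussed, so it is left out.
cacib_pasqal = {1: "green", 2: "green", 3: "green", 4: "green",
                6: "orange", 7: "green"}
print(summarize(cacib_pasqal))
```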

Conclusion

This post will help you “see through” quantum computing case studies in an educated way. It will help you craft an adoption roadmap of quantum computing in the near, mid to long term. It may also help you prepare your questions when meeting with quantum hardware and software vendors. Since the field is advancing rapidly, you may need to revisit your roadmap every year!

If you have interesting case studies to submit that illustrate how to use the proposed framework, send them to me and I will add them to this post.
